Time complexity: The efficiency of code

Time complexity is one of the main ways algorithms are analyzed in computer science. In general, an algorithm's efficiency is measured by the number of elementary operations it performs as the size of its input grows. Simply put, algorithms are procedures in code that take inputs, do something with those inputs, and produce outputs. In this way, they are similar to the mathematical functions learned in school. Applications on computers and phones need to work as efficiently as possible, especially when many users and large amounts of user data are involved. This is why efficiency is crucial.

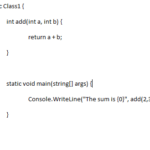

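For technical topics like this, it is best to explain using examples. Each example below is labeled in Big O notation, which describes how the number of operations grows as the input size n grows.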

O(1): constant time

O(1) refers to constant time complexity. The algorithm in the code above takes two integers as inputs, adds those two numbers, and then displays the result in the console. A web browser's console can be accessed by right-clicking the page, clicking Inspect, and opening the Console tab. Regardless of the two numbers entered as input, the same number of operations is performed. Ideally, code should run as close to constant time as the problem allows.
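
The idea can be sketched in a few lines of JavaScript that run in a browser console. This is a minimal illustration rather than the original listing, and the function name `add` is an assumption taken from the description above.

```javascript
// Hypothetical stand-in for the add function described in the text.
function add(a, b) {
  // A single addition is performed, no matter how large a and b are.
  return a + b;
}

console.log(add(2, 3));       // 5
console.log(add(2000, 3000)); // 5000
```

Both calls do the same amount of work, which is what makes the function constant time.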

O(n): linear time

O(n) refers to linear time complexity. The above C# code takes the add function from above, which has constant time complexity, and places it in a for loop that iterates n times, which in this case is 5. It’s clear from analyzing this code that the number of operations performed has a 1:1 relationship with n. If n is doubled or tripled, the number of operations doubles or triples as well.
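
A rough JavaScript equivalent of the loop described above might look like the following. It is a sketch, not the original C# listing; `addRepeatedly` and its call counter are hypothetical names added so the 1:1 relationship can be observed directly.

```javascript
// Hypothetical stand-in for the constant-time add function.
function add(a, b) {
  return a + b;
}

// Calls add once per loop iteration and returns how many calls were made.
function addRepeatedly(n) {
  let calls = 0;
  for (let i = 0; i < n; i++) {
    add(i, i);
    calls++;
  }
  return calls;
}

console.log(addRepeatedly(5));  // 5
console.log(addRepeatedly(10)); // 10
```

Doubling n from 5 to 10 doubles the number of calls, which is the defining property of linear time.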

O(log(n)): logarithmic time

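O(log(n)) refers to logarithmic time complexity. The above C# code is similar to the code used for the O(n) example. However, for the running time to be logarithmic, the loop must shrink the remaining work by a constant factor on every iteration, for example by halving the loop variable each time, so that it finishes after about log2(n) iterations. A loop that simply runs n / 2 times is still O(n), because Big O notation ignores constant factors such as 1/2. With a halving loop, the number of operations grows far more slowly than n: doubling n adds only one more iteration.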
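
To make the halving behavior concrete, here is a minimal JavaScript sketch. It is not the original listing; `countHalvings` is a hypothetical helper that counts how many times the loop body runs.

```javascript
// Counts how many times n can be halved before it reaches 1.
function countHalvings(n) {
  let steps = 0;
  // The loop variable is halved each iteration instead of incremented.
  for (let i = n; i > 1; i = Math.floor(i / 2)) {
    steps++;
  }
  return steps;
}

console.log(countHalvings(8));    // 3
console.log(countHalvings(16));   // 4
console.log(countHalvings(1024)); // 10
```

Going from 8 to 1024 multiplies n by 128 but adds only 7 iterations.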

O(2^n): exponential time

O(2^n) refers to exponential time complexity, where the number of elementary operations roughly doubles each time n increases by one. Computing the Fibonacci sequence with naive recursion is a classic example: each call makes two further recursive calls, so the number of calls grows exponentially with n. If the above code is analyzed and tested, it becomes apparent that even modest increases in n make the number of operations increase dramatically.
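
The growth can be observed with a small JavaScript sketch of the naive recursive approach. This is an illustration under assumptions, not the original listing; `fibWithCount` is a hypothetical helper that returns both the Fibonacci value and the number of function calls needed to compute it.

```javascript
// Naive recursive Fibonacci that also reports how many calls were made.
function fibWithCount(n) {
  // Base cases: fib(0) = 0 and fib(1) = 1, each costing one call.
  if (n < 2) {
    return { value: n, calls: 1 };
  }
  // Every other call spawns two more recursive calls.
  const a = fibWithCount(n - 1);
  const b = fibWithCount(n - 2);
  return { value: a.value + b.value, calls: a.calls + b.calls + 1 };
}

console.log(fibWithCount(10)); // { value: 55, calls: 177 }
console.log(fibWithCount(20)); // { value: 6765, calls: 21891 }
```

Doubling n from 10 to 20 increases the number of calls more than a hundredfold. Strictly speaking, the call count grows slightly more slowly than 2^n, so O(2^n) is best read as an upper bound.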

To learn more about this topic and other areas of computer science, I recommend the website GeeksforGeeks.